Why Counting by Hand Still Rules in Operations Management

In an age of scraping data from websites, advanced business intelligence, and abuse of innocently resting RESTful APIs, as a professional manager and operations consultant to tech companies, I still believe in counting things by hand.

In my professional life, I’ve basically always been a consultant. For me, this has meant embedding myself in a company and working on the one problem that nobody else has time for because they’re fighting daily fires just to stay alive, even though solving it will mean life or death for the company.

My consulting projects have included, to give a few random examples:

- For a scooter company: How can we reverse the trend of declining numbers of scooters on the road?

- For a car rental company: How can we make sure people pick up their car and leave within 30 minutes of arriving, instead of four hours?

- For a ride share service centre: How can we make sure people are so happy with our service that they’d rather visit us than use email?

I do occasional operations consulting for tech companies that have physical assets (like vehicles, warehouses, and workshops).

To answer all these questions, I needed data. There are two kinds of data I needed: a) the kind I download from systems and databases, and b) the kind I can only get by visiting a place, seeing things in person, and nearly every time, counting them by hand.

Here’s why I still count by hand.

Counting by Hand — In Summary

Here’s a brief summary of why I count by hand, and don’t just rely on databases, scraped data, and whatever APIs give me.

- Data that’s automatically gathered by engineers isn’t designed to answer operational questions. Often, engineers just log everything; but I may need something else.

- Data is often unreliable (usually in how it’s captured), and easy to second-guess.

- People massage the context of analysis, or how it’s presented, to tell their own story and push their own agenda, so data often tells the wrong story.

- Data is less memorable than quotes from people, and isn’t as good at convincing.

Let’s look at each in more detail below.

First reason I count by hand: Data (that’s available) often doesn’t answer operational questions.

I’m talking here about data that’s “readily accessible”. The stuff that startups store because, well, engineers make databases, and so they store data.

The problem is that data collected by engineers (let alone its visual representation) isn’t designed for solving operational problems. Most data systems are built by the same people who build the software, so they’re designed for capturing, manipulating, and displaying user and application data.

So for example, in the scooter company I was consulting to, it was very easy to answer questions like:

- Where are the scooters right now?

- Which scooters are low battery?

- How many scooters are offline?

But it’s harder (or impossible) to answer questions like:

- How many scooters were stolen last week?

- How many scooters had that weird bug with the communications module?

- How many scooters had motor issues?

None of that information was collected, much less tracked. If a scooter was stolen, it’d be marked as “lost”, a catch-all for a variety of statuses. That’s all you need for the app to work. But it’s not enough to run a profitable business, which requires understanding why scooters are lost or stolen, where, how often, and how to prevent it.

So to identify scooters as stolen, we had to use a bit of human intelligence. We’d look at the data and combine signals: if a scooter had been offline for a few days, nobody had found it at its last location, and it was in a sketchy area, well… it had probably been stolen.
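The heuristic above can be sketched in a few lines of Python. This is only an illustration, not the process we actually ran: the `ScooterRecord` fields, the `probably_stolen` function, and the three-day threshold are all hypothetical names and values, and the “sketchy area” flag is a human judgment that someone still has to make.

```python
from dataclasses import dataclass


@dataclass
class ScooterRecord:
    scooter_id: str
    days_offline: int             # days since the scooter last reported in
    found_at_last_location: bool  # did a field check find it where it last pinged?
    in_high_risk_area: bool       # a human judgment call, not a system field


def probably_stolen(record: ScooterRecord, min_days_offline: int = 3) -> bool:
    """Flag a scooter as probably stolen when all three signals line up.

    The 3-day default is an assumed stand-in for "a few days".
    """
    return (
        record.days_offline >= min_days_offline
        and not record.found_at_last_location
        and record.in_high_risk_area
    )


# Made-up example records, for illustration only.
records = [
    ScooterRecord("S-001", days_offline=5, found_at_last_location=False, in_high_risk_area=True),
    ScooterRecord("S-002", days_offline=1, found_at_last_location=False, in_high_risk_area=True),
    ScooterRecord("S-003", days_offline=6, found_at_last_location=True, in_high_risk_area=False),
]

flagged = [r.scooter_id for r in records if probably_stolen(r)]
print(flagged)
```

The point of writing it down like this is less the code than the definition: once “stolen” has explicit criteria, two people counting the same scooters should get the same number.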

This then went into a Google Sheet, i.e. a “human database” (because where else do you store data?). And it was on the basis of that analysis of the data that we were able to make some decisions.

Ultimately, though, we’d have been better off if we had started with the data we’d need to run the business, and collected that.

Second reason I count by hand: Data is often unreliable, and easy to second-guess.

Of course data is perfect. It captures a state in its entirety.

The problem is really with us humans.

The first problem with data is we often just don’t give systems data. For example, in a motorcycle workshop, in theory you should log all activities involving repairs. This BMW model came in, and it had these problems, and took this time, and needed these parts, and these were resolved, then these tests passed.

Nobody does all of this. There are many reasons, but it generally comes down to “trained laziness”.

I don’t mean people are lazy inherently. We’re just less inclined to do things that have no apparent value. If counting something seems (and often is) like busy-work, people don’t do it. And most data entry seems like that. The explanation from the bean counters of “we need good records” just isn’t enough.

In the above example, a good explanation for logging repairs is “so we can see which parts we need to order more of in advance, so we’re never caught short”, or “so we can make sure our mechanics aren’t overworked, and we can decide to hire more if necessary”.

The second problem with data is we (as analysts) don’t know how to talk to data systems. I’ve seen countless analysts (including myself) tripped up by things like queries with errors in them, chart formatting mistakes, or simply querying the wrong things.

Compounding that, I often don’t know the weird intricacies of how data systems are built. This includes things like:

- How a data system gets its data

- Definitions for statuses

- How often data is updated

For example, early in a project at Lyft I was trying to figure out survey response scores for some of our physical sites. How did people get the surveys? They got them by text message. And how were they sent the text message? By the agent.

This meant that whether we’d get a reply would depend entirely on:

- Whether the customer had an account with us with their phone number

- Whether the agent said “Hey, I’m sending you a survey – please fill it out!”

- Whether the agent even bothered to send the survey (or intentionally didn’t)

In other words, the data was riddled with inconsistency. It’s part of the reason I set up the automatic SMS sending software that became known as the “Dana Server” at Lyft.

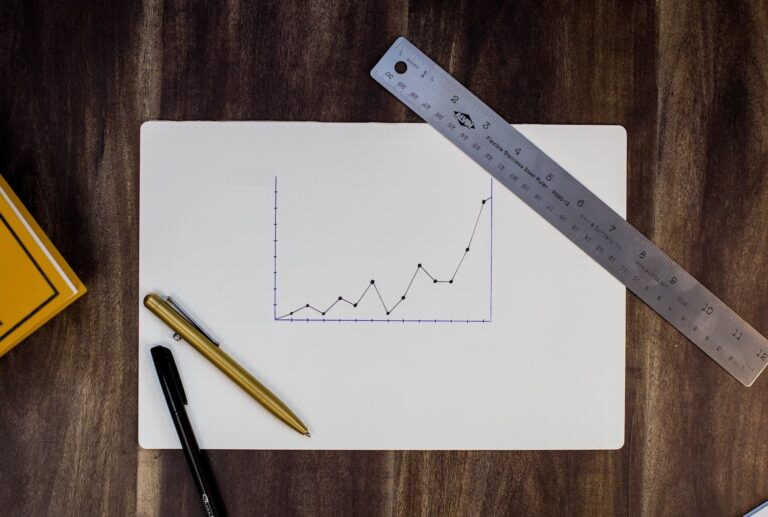

Third reason I count by hand: Someone is always massaging data to tell their story.

Shockingly, data is manipulated.

People who have never spent much time around science like to think that science is some perfect universe, where numbers tell an entire story.

But after spending just a little time in the science world, you’ll realise that scientists feel comfortable with saying things like “margin of error”, “certain within a reasonable range”, “in a statistically significant sample of cases”.

There are so many problems in all of this. There’s even a large scientific movement against the abuse of statistical significance.

Look at this same statistic presented in a few ways:

- Last year, 200 people died in Australia in traffic accidents, whereas 15,000 died of respiratory illnesses: 75 times as many.

- In the past twelve months alone, only one in one hundred thousand people died in traffic accidents.

- Since last October, the equivalent of four full bus-loads of people have died in avoidable traffic accidents.

- More than a half of the 200 people in Australia who died last year in traffic accidents were innocent passengers, of whom 40% were children.

Basically, the same statistic can be shown in many different ways, depending on your agenda. Science isn’t pure science; it’s just a tool for organising communication with humans, and we are organic beings governed by emotions.
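To see how little separates these framings, here is a rough sketch of the arithmetic behind the first three. The death counts and the four bus-loads come from the examples above; the population figure is an assumption I picked as a round number to turn a count into a rate, not a census figure.

```python
traffic_deaths = 200         # from the examples above
respiratory_deaths = 15_000  # from the examples above
bus_loads = 4                # from the examples above

# Assumption: a round population figure, used only to turn the count into a rate.
assumed_population = 20_000_000

ratio = respiratory_deaths / traffic_deaths           # "N times as many"
one_in_n = assumed_population // traffic_deaths       # "one in N people"
people_per_bus = traffic_deaths // bus_loads          # size of one "bus-load"

print(f"Respiratory illness killed {ratio:.0f} times as many people as traffic accidents.")
print(f"About one in {one_in_n:,} people died in a traffic accident.")
print(f"That is {bus_loads} bus-loads of roughly {people_per_bus} people each.")
```

Every line is computed from the same count of 200. Only the denominator, and therefore the story, changes.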

Fourth reason I count by hand: Data is always less memorable than people.

Pictures tell stories. Quotes from people tell stories. But data is always boring.

This depends a little on the person. Some are convinced more by numbers; some are convinced by anecdotes. This requires judgment.

But at the very least, data is best backed up by a visual, a real description, or an anecdote.

A “manually counted” bit of data always speaks more strongly.

For example, when comparing market share of two scooter companies, you can either plug into the API of various companies and scrape it, or you can go out and see customers using scooters.

This lets you tell a story like: “I went out on the street and watched scooters. I counted 40% using private scooters, and of the remaining, 50% were Lime. I then asked them why they liked Lime and…”

We actually did this, and the results were enlightening. Short answer why people use Lime: they were first, they’re everywhere, they’re fast, and they’re comfortable.

The general concept of “counting things” extends to asking people questions. Getting direct quotes from customers is a little harder than just reading a chart, but the ROI is incredible. Because it lets you add flesh and blood to cold hard numbers.

For example, which do you think is more powerful?

- “Over 75% of users prefer our new model.”

- “Over 75% of users prefer our new model. I went out and asked some why, and out of fifty respondents, 35 said that they liked the new model for either being faster or sturdier looking than the competition. One said ‘It just looks tougher than the others.'”

Or which of these two:

- “Three fifths of drivers who come in with a pay issue are satisfied with the outcome.”

- “Yesterday we served thirty individual drivers with pay issues. They took longer, but three fifths were happy with the outcome. One wrote in her survey response: ‘I wish it never happened, but I appreciated the time Sandra took to fix my issue.'”

When managers are presented with numbers, they understand them, but it’s anecdotes that they really connect with. When they share anything with others, it’s either one very high-level statistic, or quotes and stories.

Final reason I count by hand: People see you counting and it creates change.

If you count by hand, or ask other people to, it repeatedly re-emphasises the importance of whatever you’re counting.

In one project, I started asking a couple of questions:

- How many scooters were broken today?

- And how many did you fix?

On day one, people said “I don’t know” and made up a ballpark number. I drilled a little further for specifics. Days two through thirteen weren’t much better. Often, they’d count what they had in front of them and try to remember what had happened before. Sometimes the person wouldn’t even be in the office and would go off memory altogether.

But after about two weeks, it started to change. They realised I wasn’t going away, and that they needed to have some ready answers for me when I asked.

My goal wasn’t arbitrary counting, by the way. It was just to make sure we were fixing at least as many scooters as were breaking, so that we could bring down the inventory in the warehouse. The team knew this. When they had the numbers in front of them and saw they were achieving this goal, they knew they were on track.
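The check itself is simple enough to sketch. The daily numbers below are made up for illustration, and a spreadsheet does the same job; the names `daily_counts` and `backlog_change` are mine, not anything we had in a system.

```python
# Hypothetical daily tallies: (day, scooters broken, scooters fixed)
daily_counts = [
    ("Mon", 12, 9),
    ("Tue", 10, 11),
    ("Wed", 8, 13),
]

backlog_change = 0
for day, broken, fixed in daily_counts:
    net = broken - fixed  # positive means the pile of broken scooters grew
    backlog_change += net
    status = "on track" if fixed >= broken else "falling behind"
    print(f"{day}: broken={broken} fixed={fixed} net={net:+d} ({status})")

print(f"Change in warehouse backlog over the period: {backlog_change:+d}")
```

A negative total means the backlog is shrinking, which was the whole goal.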

Final thoughts

“Counting stuff by hand” is so powerful and so simple that it’s almost my mantra. Even in the digital world it works as a metaphor: pick a metric that describes the problem, then go and get the information you need to fix it.

If that information is digital, I’ll get the data into a Google Sheet and analyse it myself, rather than being content with a BI analyst doing it for me. It’s more a mindset of taking responsibility for the information that goes into our decisions.

Try it… I’m curious to know how it works for other people.